Selected Publications

Metrics of Motor Learning for Analyzing Movement Mapping in Virtual Reality

D. Yu, M. Cibulskis, E. Mortensen, M. Christensen, J. Bergström (CHI '24) [PDF] [Video]

Virtual reality techniques can modify how physical body movements are mapped to the virtual body. However, it is unclear how users learn such mappings and, therefore, how the learning process may impede interaction. Drawing on the motor learning literature, we design new metrics specifically for VR interaction. We show that these metrics capture learning behaviors that task completion time, a commonly used measure of learning in HCI, does not. They can also provide new insights into how users adapt to movement mappings and help analyze and improve VR techniques.

Modeling Temporal Target Selection: A Perspective from Its Spatial Correspondence

D. Yu, B. Syiem, A. Irlitti, T. Dingler, E. Velloso, J. Goncalves (CHI '23) [PDF] [Video]

Temporal target selection requires users to wait and trigger the selection input within a bounded time window, with a selection cursor that is expected to be delayed. This task captures various game scenarios, for example, deciding when to shoot a projectile at a moving object. This work explores models that predict "when" users typically perform a selection (i.e., the user selection distribution) and their selection error rates in such tasks, contributing new knowledge on user selection behavior.

Optimizing the Timing of Intelligent Suggestion in Virtual Reality

D. Yu, R. Desai, T. Zhang, H. Benko, T. R. Jonker, A. Gupta (UIST '22) [PDF] [Video]

Intelligent suggestions based on target prediction models can enable low-friction input within VR and AR systems. For example, a system could highlight the predicted target and enable a user to select it with a simple click. However, as the probability estimates can be made at any time, it is unclear when an intelligent suggestion should be presented. Earlier suggestions could save a user time and effort but be less accurate, while later ones could be more accurate but save less time and effort. We thus propose a computational framework for determining the optimal timing of intelligent suggestions.

Blending On-Body and Mid-Air Interaction in Virtual Reality

D. Yu, Q. Zhou, T. Dingler, E. Velloso, J. Goncalves (ISMAR '22) [PDF] [Video]

On-body interfaces, which use the surface of the human body as an input or output platform, can provide new opportunities for designing VR interaction. However, it is unclear how they can best support current VR systems, which rely mainly on mid-air interaction. We propose BodyOn, a collection of six design patterns that combine on-body and mid-air interfaces to achieve more effective 3D interaction. To complete a VR task, a user may pair thumb-on-finger gestures, finger-on-arm gestures, or on-body displays with mid-air input, including hand movement and orientation.

Gaze-Supported 3D Object Manipulation in Virtual Reality

D. Yu, X. Lu, R. Shi, H. N. Liang, T. Dingler, E. Velloso, J. Goncalves (CHI '21) [PDF] [Video]

This work investigates strategies for integrating, coordinating, and transitioning between gaze and hand input for 3D object manipulation in VR. Specifically, we aim to understand whether incorporating gaze input can benefit VR object manipulation tasks and how it should be combined with hand input to improve usability and efficiency. We designed and compared four techniques that use different combination strategies. For example, ImplicitGaze lets users switch between gaze and hand input without an explicit trigger such as a button press.

Fully-Occluded Target Selection in Virtual Reality

D. Yu, Q. Zhou, J. Newn, T. Dingler, E. Velloso, J. Goncalves (TVCG '20) [PDF] [Video]

Fully-occluded targets are common in virtual environments, ranging from a virtual object located behind a wall to a data point of interest hidden in a complex visualization. However, efficient input techniques for finding and selecting these targets remain largely unexplored in VR systems. In this research, we developed ten techniques for fully-occluded target selection in VR and evaluated their performance through two user studies. We further demonstrated how the techniques could be applied to real-world application scenarios.

Modeling Endpoint Distribution of Pointing Selection Tasks in Virtual Reality Environments

D. Yu, H. N. Liang, X. Lu, K. Fan, B. Ens (TOG '19) [PDF] [Video]

Understanding the endpoint distribution of pointing selection tasks can reveal underlying patterns in how users acquire a target. We introduce EDModel, a novel endpoint distribution model that predicts how endpoints are distributed when users select targets with different characteristics (width, distance, and depth) in virtual reality environments. We demonstrate three applications of EDModel and open-source our experiment data for future research.

Design and Evaluation of Visualization Techniques of Off-Screen and Occluded Targets in Virtual Reality Environments

D. Yu, H. N. Liang, K. Fan, H. Zhang, C. Fleming, K. Papangelis (TVCG '19) [PDF]

Locating targets of interest in a 3D environment becomes problematic when the targets reside outside the user’s view or are occluded by other objects (e.g., buildings). This research explored the design and evaluation of five visualization techniques (3DWedge, 3DArrow, 3DMinimap, Radar, and 3DWedge+). Based on the results of the two user studies, we provide a set of recommendations for designing visualization techniques for off-screen and occluded targets in 3D space.

PizzaText: Text Entry for Virtual Reality Systems Using Dual Thumbsticks

D. Yu, K. Fan, H. Zhang, D. V. Monteiro, W. Xu, H. N. Liang (TVCG '18) [PDF] [Video]

PizzaText is a circular keyboard layout technique for text entry in virtual reality systems that uses the dual thumbsticks of a hand-held game controller. Users can quickly enter text with this circular layout by rotating the controller's two thumbsticks. The technique makes text entry simple and efficient, even for novice users. The results show that novice users can achieve an average of 8.59 words per minute (WPM), while expert users can reach 15.85 WPM after only two hours of training.

PhD Thesis

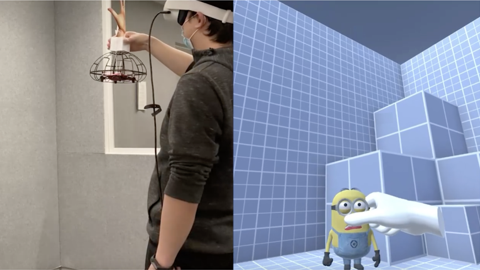

VR Haptic Drone

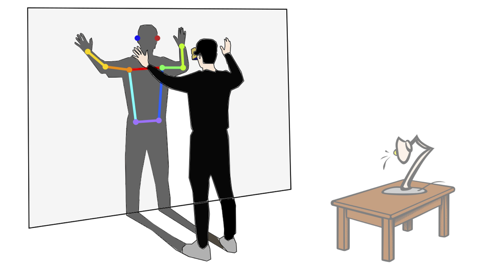

ShadowDancXR

RL for StarCraft 2

On-Pet Interaction

VRHome

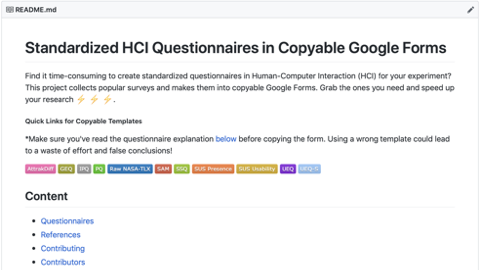

CopyQues

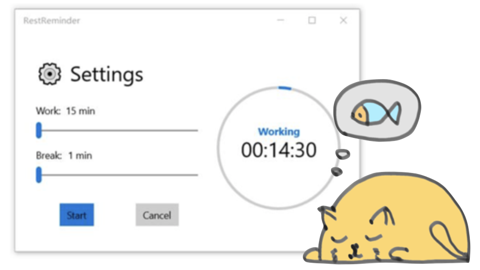

Rest Reminder
